Data Engineer Interview Experience at Media.Net (2023)

A journey from preparation to getting an entry-level data engineering job

Introduction

I joined Media.Net as a Data Engineer in July 2023. In this blog post, I’ll walk you through my journey from preparation to bagging an entry-level data engineering job.

Preparation

I decided to switch from my job at HashedIn By Deloitte around November 2022, and that's when I started preparing.

Since I was targeting entry-level data engineering jobs, I was aware of a few things:

Data Structures and Algorithms questions in DE interviews are comparatively easier than those in SDE interviews.

A good grasp of SQL, Hadoop, and Spark is a must.

A good understanding of your previous work experience and tech stack is important.

Previous Work Experience

I started by revising the tech stack I was working with at the time (Akka and Scala), along with my projects.

SQL

Then I started preparing for SQL questions. Here are the resources I used:

LeetCode: I solved most of the SQL questions here, along with the SQL study plan. Overall, I must have solved around 100 questions at the time.

Ankit Bansal’s YouTube channel: I solved around 100 of the SQL problems discussed on this channel. It has SQL playlists you can refer to.

8 Week SQL Challenge: This is a great way to apply your skills to questions based on specific use cases. I solved only 3 of the challenges, since by then the concepts were getting repetitive for me.

Hadoop

Having solved plenty of SQL questions, I moved on to Hadoop. The resources I used were:

Apache Hadoop official documentation: Yes, I read almost everything in the official documentation on HDFS, MapReduce, and YARN.

Data Engineering YouTube channel: This channel has some well-explained videos on Hadoop and Hive.

Apache Spark

I was already familiar with the Apache Spark API, as I had done a Udemy course on it earlier. However, Spark's internals were a bit unclear to me, so I read about them in the official documentation and in multiple blogs and YouTube videos. Unfortunately, I didn’t find any single resource that explained everything well.

Tip: You can use the Databricks Community Edition to quickly run Spark code instead of setting Spark up on your local machine.

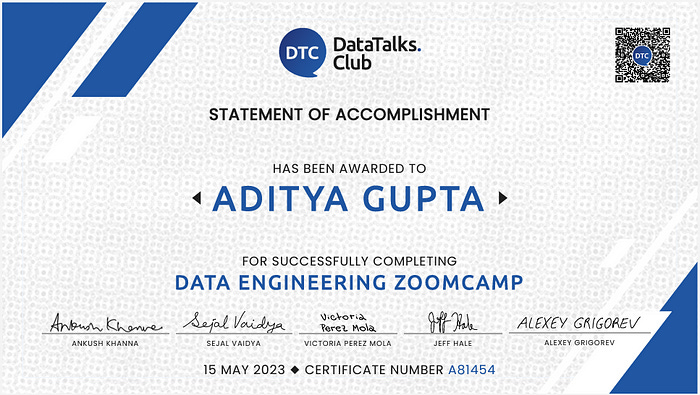

Data Engineering Zoomcamp 2023

By the time I finished learning Spark, it was January 2023, and I had also completed an AWS certification (not that it mattered here). Then I came across this Zoomcamp, an open-source course that teaches practical data engineering from the basics.

The idea seemed interesting, so I enrolled. I spent 2–3 months completing the assignments and the capstone project required to finish the course.

Result? I built an end-to-end capstone project and graduated from the course successfully.

Applying for Jobs

I started applying for entry-level jobs while still doing the Zoomcamp, since I felt confident about my SQL skills and Hadoop fundamentals. By then I had probably applied to 500+ job openings, and I got zero replies.

But… the entire time I was preparing, I was active on LinkedIn and Twitter, sharing whatever I was learning and building, and I got some decent engagement from the community.

I started getting recruiter reach-outs for data engineering jobs, but most of them required 3–4 years of work experience, which I lacked. Around that same time, a recruiter from Media.Net reached out to me about an entry-level data engineering position!

I got on a call with the recruiter, and the interview process began.

Interviewing

In the initial call with the recruiter, we discussed my previous work experience, the technologies I had worked with, and the ones I had no hands-on work experience with. To my surprise, Spark was not a mandatory skill for them, even though the job description listed it as one!

So I scheduled my interviews in the coming days…

Technical Round — 1

This one-hour round was with a Data Engineer and had 2–3 SQL questions and one DSA question.

The SQL questions were based on a case study that assessed customers’ liabilities to a bank, based on their existing loans and account balances. I don’t remember the exact case study, but it used JOINs and CASE statements extensively.
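The exact case study isn't recalled above, so what follows is only a hypothetical question of the same shape. Given customers, their accounts, and their loans, it labels each customer's position using JOINs and a CASE expression. The table names, columns, and status labels (`NO_LIABILITY`, `COVERED`, `NET_LIABLE`) are my own assumptions for illustration, not the interview's. The query runs on Python's built-in `sqlite3`. It aggregates balances and loans in separate CTEs before joining, which avoids a common trap: joining accounts and loans directly multiplies rows for customers who have several of each.

```python
import sqlite3

# Hypothetical schema, for illustration only (not the actual interview case study).
SCHEMA = """
CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE accounts  (account_id INTEGER PRIMARY KEY, customer_id INTEGER, balance REAL);
CREATE TABLE loans     (loan_id INTEGER PRIMARY KEY, customer_id INTEGER, outstanding REAL);
"""

# Aggregate each side first, then LEFT JOIN so customers with no accounts
# or no loans still appear (their missing totals become 0 via COALESCE).
QUERY = """
WITH bal AS (
    SELECT customer_id, SUM(balance) AS total_balance
    FROM accounts GROUP BY customer_id
),
debt AS (
    SELECT customer_id, SUM(outstanding) AS total_loans
    FROM loans GROUP BY customer_id
)
SELECT c.name,
       COALESCE(b.total_balance, 0) AS total_balance,
       COALESCE(d.total_loans, 0)   AS total_loans,
       CASE
           WHEN COALESCE(d.total_loans, 0) = 0 THEN 'NO_LIABILITY'
           WHEN COALESCE(b.total_balance, 0) >= COALESCE(d.total_loans, 0) THEN 'COVERED'
           ELSE 'NET_LIABLE'
       END AS status
FROM customers c
LEFT JOIN bal  b ON b.customer_id = c.customer_id
LEFT JOIN debt d ON d.customer_id = c.customer_id
ORDER BY c.customer_id;
"""


def build_demo_db():
    """Create an in-memory database with a few made-up rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO customers VALUES (?, ?)",
                     [(1, "asha"), (2, "ben"), (3, "chen")])
    conn.executemany("INSERT INTO accounts VALUES (?, ?, ?)",
                     [(1, 1, 500.0), (2, 1, 700.0), (3, 2, 100.0)])
    conn.executemany("INSERT INTO loans VALUES (?, ?, ?)",
                     [(1, 1, 1000.0), (2, 2, 300.0)])
    return conn


def classify_customers(conn):
    """Return (name, total_balance, total_loans, status) for every customer."""
    return conn.execute(QUERY).fetchall()


if __name__ == "__main__":
    for row in classify_customers(build_demo_db()):
        print(row)
```

With the demo rows, `asha` (balance 1200, loans 1000) comes out as `COVERED`, `ben` as `NET_LIABLE`, and `chen`, who has neither accounts nor loans, as `NO_LIABILITY`.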

The DSA question was an array problem I had solved before, so I gave both the brute-force and the optimized approach.

Later that day, I learned that I had cleared this round and that the next one was scheduled a few days later.

Technical Round — 2

This one-hour system design round was with a Lead Data Engineer.

In this round, we discussed designing a system that stores large-scale data reliably and processes it to solve the Two Sum problem (finding pairs of numbers that add up to a given target).

The question had multiple parts:

How to store huge datasets in a distributed system?

How will the system handle failures in the nodes of the distributed system?

How will the clients read the data?

How will the mappers and reducers work to solve the Two Sum problem?

Though I couldn’t answer the last question, I answered the first three using what I had learned about the architecture of HDFS and MapReduce.
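For reference, here is one possible approach to that last part. It is sketched as an in-memory Python simulation of the map, shuffle, and reduce phases, not as Hadoop code. It assumes the task is to find every distinct pair of values in a huge dataset that adds up to a fixed target `T`. The key idea is that each mapper emits a value `x` under the key `min(x, T - x)`. Both halves of any matching pair therefore share a key and land on the same reducer, and each reducer only checks whether both halves are present. All function names here are illustrative.

```python
from collections import defaultdict
from itertools import chain


def map_phase(split, target):
    """Mapper: emit (canonical_key, value) for every number in one input split.

    x and target - x share the key min(x, target - x), so both members of a
    matching pair are routed to the same reducer.
    """
    for x in split:
        yield min(x, target - x), x


def shuffle(mapped):
    """Group mapper output by key, as the framework's shuffle step would."""
    groups = defaultdict(list)
    for key, value in mapped:
        groups[key].append(value)
    return groups


def reduce_phase(key, values, target):
    """Reducer: emit (key, target - key) if both halves of the pair were seen."""
    counts = defaultdict(int)
    for v in values:
        counts[v] += 1
    partner = target - key
    if key == partner:
        # x + x == target only counts if x occurs at least twice.
        if counts[key] >= 2:
            yield key, partner
    elif counts[key] > 0 and counts[partner] > 0:
        yield key, partner


def two_sum_mapreduce(splits, target):
    """Run map -> shuffle -> reduce over a list of input splits (one per mapper)."""
    mapped = chain.from_iterable(map_phase(s, target) for s in splits)
    groups = shuffle(mapped)
    return sorted(pair
                  for key in groups
                  for pair in reduce_phase(key, groups[key], target))


if __name__ == "__main__":
    splits = [[1, 7, 4], [6, 3, 5], [2, 5]]
    print(two_sum_mapreduce(splits, 10))  # [(3, 7), (4, 6), (5, 5)]
```

This reports each distinct value pair once rather than index pairs. In a real cluster, a very frequent value would make its key's reducer a hotspot, so data skew is worth raising in such a discussion.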

I wasn’t sure I would clear this round, but two days later, I got a call saying I had!

Technical Round — 3

This was again a system design round with a Lead Data Engineer.

This round focused heavily on my data modeling and SQL skills. Here’s the problem statement: a music company is onboarding new artists onto its platform and wants to recommend these artists’ songs to its users, or to a subset of them.

As you can tell, the problem statement is vague. There are many unknowns and many possible directions it can go. The main parts of the problem were:

Designing the data models to store users, artists, and any other entities.

Handling changes in the dimensions (slowly changing dimensions), or deciding whether we need to at all.

Finding the relevant users to recommend a given artist to. (This part needed a SQL query, nothing fancy.)
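For the second part, one common answer is a Type 2 slowly changing dimension. Instead of overwriting an artist's attribute (say, their genre), you expire the current row and insert a new version, which keeps the history queryable. What follows is a minimal sketch on Python's built-in `sqlite3`. The `dim_artist` table and its columns are hypothetical, since the schema from the interview isn't described here.

```python
import sqlite3


def create_dim_artist(conn):
    """Hypothetical SCD Type 2 dimension: one row per version of an artist."""
    conn.execute("""
        CREATE TABLE dim_artist (
            artist_sk   INTEGER PRIMARY KEY AUTOINCREMENT,  -- surrogate key
            artist_id   INTEGER NOT NULL,                   -- business key
            genre       TEXT    NOT NULL,
            valid_from  TEXT    NOT NULL,
            valid_to    TEXT,                               -- NULL while current
            is_current  INTEGER NOT NULL DEFAULT 1
        )""")


def upsert_artist(conn, artist_id, genre, as_of):
    """Apply a change as SCD Type 2: expire the current row, insert a new one."""
    row = conn.execute(
        "SELECT genre FROM dim_artist WHERE artist_id = ? AND is_current = 1",
        (artist_id,)).fetchone()
    if row is not None and row[0] == genre:
        return  # attribute unchanged: keep the current version as-is
    with conn:  # expire + insert commit together (or roll back together)
        conn.execute(
            "UPDATE dim_artist SET valid_to = ?, is_current = 0 "
            "WHERE artist_id = ? AND is_current = 1",
            (as_of, artist_id))
        conn.execute(
            "INSERT INTO dim_artist (artist_id, genre, valid_from) VALUES (?, ?, ?)",
            (artist_id, genre, as_of))


def history(conn, artist_id):
    """All versions of one artist, oldest first."""
    return conn.execute(
        "SELECT genre, valid_from, valid_to, is_current FROM dim_artist "
        "WHERE artist_id = ? ORDER BY artist_sk",
        (artist_id,)).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_dim_artist(conn)
    upsert_artist(conn, 42, "indie", "2023-01-01")
    upsert_artist(conn, 42, "indie", "2023-02-01")  # no change, no new version
    upsert_artist(conn, 42, "pop", "2023-06-01")    # genre change, new version
    for version in history(conn, 42):
        print(version)
```

The alternative answer to "or do we have to?" is Type 1, which simply overwrites the row. That works when nobody needs to ask what an artist's genre was at some earlier date.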

There are no right or wrong answers here. I kept discussing with the interviewer the approaches I could think of, the questions I had, and anything I was confused about. In the end, I managed to write a long SQL query, based on the schema I had designed, to get the list of users for a given artist.
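To illustrate the third part, here is one simple heuristic. It is not the query from the interview. It recommends a new artist to users who have listened to other artists in the same genre and haven't listened to this artist yet. The tables (`users`, `artists`, `songs`, `listens`), the genre-matching rule, and the `min_listens` threshold are all hypothetical. The sketch runs on Python's built-in `sqlite3`.

```python
import sqlite3

# Hypothetical star-ish schema: listens is the fact table.
SCHEMA = """
CREATE TABLE users   (user_id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE artists (artist_id INTEGER PRIMARY KEY, name TEXT, genre TEXT);
CREATE TABLE songs   (song_id INTEGER PRIMARY KEY, artist_id INTEGER REFERENCES artists);
CREATE TABLE listens (user_id INTEGER REFERENCES users,
                      song_id INTEGER REFERENCES songs,
                      listened_at TEXT);
"""

# Users with at least :min_listens plays of *other* artists in the new artist's
# genre, excluding users who already listen to the new artist.
QUERY = """
SELECT l.user_id, COUNT(*) AS genre_listens
FROM listens l
JOIN songs   s ON s.song_id   = l.song_id
JOIN artists a ON a.artist_id = s.artist_id
WHERE a.genre = (SELECT genre FROM artists WHERE artist_id = :artist_id)
  AND a.artist_id <> :artist_id
  AND l.user_id NOT IN (
        SELECT l2.user_id
        FROM listens l2
        JOIN songs s2 ON s2.song_id = l2.song_id
        WHERE s2.artist_id = :artist_id)
GROUP BY l.user_id
HAVING COUNT(*) >= :min_listens
ORDER BY genre_listens DESC, l.user_id;
"""


def users_for_artist(conn, artist_id, min_listens=2):
    """Return (user_id, genre_listens) rows of candidate users for an artist."""
    return conn.execute(QUERY, {"artist_id": artist_id,
                                "min_listens": min_listens}).fetchall()


def build_demo_db():
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO users VALUES (?, ?)",
                     [(1, "u1"), (2, "u2"), (3, "u3")])
    conn.executemany("INSERT INTO artists VALUES (?, ?, ?)",
                     [(10, "old_jazz", "jazz"), (11, "rock_band", "rock"),
                      (12, "new_jazz", "jazz")])  # artist 12 is the newcomer
    conn.executemany("INSERT INTO songs VALUES (?, ?)",
                     [(100, 10), (101, 10), (102, 11), (103, 12)])
    conn.executemany("INSERT INTO listens VALUES (?, ?, ?)", [
        (1, 100, "2023-05-01"), (1, 101, "2023-05-02"),  # jazz listener
        (2, 102, "2023-05-01"), (2, 102, "2023-05-03"),  # rock listener
        (3, 100, "2023-05-01"), (3, 101, "2023-05-02"),
        (3, 103, "2023-05-04"),                          # already listens to artist 12
    ])
    return conn


if __name__ == "__main__":
    print(users_for_artist(build_demo_db(), 12))  # [(1, 2)]
```

User 2 is skipped because they listen to a different genre. User 3 is skipped because they already listen to artist 12. In a real discussion, the interesting questions are what "relevant" should mean (genre, co-listening, recency) and how to keep the query cheap at scale.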

The next day, I learned that I’d cleared this round.

Technical Round — 4

This round was with the Director of Engineering and ran for about 1.5 hours.

I don’t remember the exact question, but it was along the lines of designing a system that can process a huge, real-time stream of messages. We discussed how it would run in a distributed manner, what happens if a node goes down, how to make it fault-tolerant, how clients would read from the stream, and many other things.

I could relate this problem to Kafka, but I knew only its surface-level details. I wasn’t sure how it rebalances partitions among its consumers or how it achieves fault tolerance.

Nevertheless, I discussed the approaches I could think of. Since I had experience with Akka (a toolkit for building concurrent, distributed applications, including stream processing), I drew on its concepts, such as event sourcing and gossip protocols.
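The core ideas in that kind of discussion can be sketched as a toy, single-process model:

* partitioning a stream by key,
* sharing partitions among a group of consumers,
* resuming from committed offsets when a consumer fails.

This model is loosely inspired by how Kafka describes partitions and consumer groups, but it is not Kafka's protocol or client API. The CRC32 hashing, the round-robin assignment, and all class and method names are assumptions for illustration.

```python
import zlib
from collections import defaultdict


class PartitionedLog:
    """Append-only log split into partitions. Messages with the same key always
    land in the same partition, which preserves per-key ordering."""

    def __init__(self, num_partitions):
        self.partitions = [[] for _ in range(num_partitions)]

    def append(self, key, value):
        # crc32 is deterministic across runs (unlike Python's salted hash()).
        p = zlib.crc32(key.encode()) % len(self.partitions)
        self.partitions[p].append((key, value))
        return p


class ConsumerGroup:
    """Toy consumer group: partitions are shared out among live consumers,
    and progress is tracked per partition as committed offsets."""

    def __init__(self, log, consumers):
        self.log = log
        self.consumers = list(consumers)
        self.committed = defaultdict(int)  # partition -> next offset to read
        self.assignment = {}
        self.rebalance()

    def rebalance(self):
        # Round-robin assignment of partitions over the live consumers.
        self.assignment = {c: [] for c in self.consumers}
        for p in range(len(self.log.partitions)):
            owner = self.consumers[p % len(self.consumers)]
            self.assignment[owner].append(p)

    def poll(self, consumer, max_records=10):
        """Read from this consumer's partitions starting at the committed
        offsets, then commit. (A real system would commit only after the
        records were processed, to avoid losing them on a crash.)"""
        out = []
        for p in self.assignment[consumer]:
            start = self.committed[p]
            batch = self.log.partitions[p][start:start + max_records]
            out.extend(batch)
            self.committed[p] = start + len(batch)
        return out

    def fail(self, consumer):
        # A crashed consumer's partitions move to the survivors. Offsets are
        # stored with the group, so survivors resume where it stopped.
        self.consumers.remove(consumer)
        self.rebalance()


if __name__ == "__main__":
    log = PartitionedLog(num_partitions=4)
    for i in range(8):
        log.append(f"user-{i % 3}", i)
    group = ConsumerGroup(log, ["c1", "c2"])
    first = group.poll("c1")
    group.fail("c1")
    rest = group.poll("c2")
    print(len(first) + len(rest))  # 8: each message read once in this run
```

Keeping offsets with the group rather than inside the consumer is what lets a survivor pick up a failed consumer's partitions without re-reading or skipping messages. Replicating the partitions themselves across brokers, the other half of fault tolerance, is left out of this sketch.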

The next day, I learned that I’d cleared this round as well.

Hiring Manager Round

By this time, I’d already started salary negotiations with my recruiter, so this round was mainly a chance for me to get to know the company and ask any questions I had.

This round lasted 30 minutes and went well.

Conclusion

Even though I got no replies to formal job applications, I did get recruiter reach-outs on LinkedIn just by posting about what I was learning 🤷🏻

If a job description mentions a technology that you don’t know, apply anyway. Don’t self-reject.

Interviews can be completely different from what you expect, so clarify as much as you can with your recruiter.

That was my experience landing my first DE job. At the time of writing, I’ve been working at Media.Net for around 6 months, and I’ve already learned a lot!

Thanks a lot for reading. Let me know if you have any comments!